Local Applications of Multi-microphone Alexa Style Hardware

I have made a couple of simple demos/experiments that do not require an online service subscription, as Alexa, Siri, etc. do.

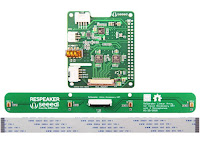

Two experiments were carried out:

- Direction of arrival, used to point a servo at whoever speaks the Wake Word.

- Real-time speech-to-text detection, with a 16,000 word vocabulary.

Relying on your own processor is an evolutionary step back in technology compared with the optimized supercomputer systems that make Alexa seem so real.

I have been working with the Seeed Studio ReSpeaker 4 Mic Linear Array Hat for the Raspberry Pi. Seeed currently offers about 6 different models, including 2 standalone arrays that use different software.

Direction of Arrival Speaker Location Tracking

I set up the solution Seeed offers for Alexa, less the actual Alexa interface, to see where I could go from there. This sets up a chain of functions connected by Python code from the Voice-Engine library, which links various function libraries into a signal pipeline. Here is the configuration:

Source(alsa) --> AEC --> NS --> KWS --> DOA --> Servo

The ReSpeaker Hat drivers deliver the Source through the Linux ALSA sound framework.

- AEC = Acoustic Echo Cancellation: used to cancel out the voice or music that may be playing out of an Alexa speaker; otherwise some push-to-talk button, like on a CB radio, would be needed. The ReSpeaker has a speaker signal input ADC that enables the AEC calculations.

- NS = Noise Suppression: may cut out some periodic noise.

- KWS = Keyword Spotting: this is typically the single "Wake Word" detected locally, which causes the voice to then be shipped off to the Alexa processor.

- DOA = Direction of Arrival: the linear array can estimate the angle of the sound source by comparing the arrival times at each mic.

- Servo: a bit of code I added to point an RC servo at the sound source, instead of using an LED ring as an indicator.

The Library: github.com/voice-engine/voice-engine

Additional Tutorial code: github.com/voice-engine/make-a-smart-speaker

Voice-Engine takes care of connecting the libraries you have installed from various sources (a fairly painless process).

Snowboy: provides "Wake Word" (or phrase) spotting; it already knows a few voice interface system names.

snowboy.kitt.ai github.com/Kitt-AI/snowboy

Watching for your Wake Word uses about 5% of the processor power of a Raspberry Pi. I understand you can watch for more than one word, but they may have to be "Universal" keywords that have been trained with more than 500 different voice samples.

Their site provides a mechanism for submitting a new keyword for encoding, but it is likely to work reliably only with your own voice. It should be recorded with the actual microphone used in your final application.

This is a business (founded by 4 PhDs) that has commercial-use licensing requirements, so the limitations that appear to apply to self-recorded keywords could be an artificial obstacle.

I noticed that keywords like "Computer" have nearly 500 sample submissions, at which point they should be promoted to Universal keyword status.

I found the speaker-independent keyword response to be quite reliable, good for 25+ feet in a quiet room. This software setup does not focus on the voice source to exclude other sound sources, as more sophisticated beamforming techniques can.

Direction of arrival detection outputs an angle; the linear array is good for about +/- 90 degrees.
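To make the arrival-time idea concrete, here is a minimal sketch of how one time difference between two mics turns into an angle under the usual far-field assumption. This is not the Voice-Engine DOA code; the function name and the 10 cm spacing are made up for illustration, so measure your own board. Because the arcsine only spans -90 to +90 degrees, a linear array cannot tell front from back, which matches the range quoted above.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # approximate value for air at room temperature


def doa_angle_deg(delay_s, mic_spacing_m):
    """Far-field arrival angle in degrees for one microphone pair.

    0 means broadside (straight ahead of the array); +/-90 means the sound
    travels along the line of the microphones.
    """
    # Extra path length to the farther mic, as a fraction of the spacing.
    ratio = SPEED_OF_SOUND_M_S * delay_s / mic_spacing_m
    # Measurement noise can push the ratio slightly outside [-1, 1].
    ratio = max(-1.0, min(1.0, ratio))
    return math.degrees(math.asin(ratio))


# Hypothetical spacing between two mics, in metres (an assumption).
SPACING_M = 0.10

for delay_us in (-250.0, -100.0, 0.0, 100.0, 250.0):
    angle = doa_angle_deg(delay_us * 1e-6, SPACING_M)
    print(f"{delay_us:+7.1f} us -> {angle:+6.1f} deg")
```

With spacings this small the whole angular range corresponds to a few hundred microseconds of delay, so the estimate is sensitive to the sample rate and to noise.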

It was fairly straightforward to drive an RC hobby servo with a Raspberry Pi, using one of the 2 hardware PWM pins available. Set up with a 50 ms pulse window, the full servo range is covered by only about 1 to 2 ms of pulse width. This amplifies the already imperfect timing of the Pi's PWM output, causing some intermittent hunting vibration when holding a position.
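The arithmetic behind that sensitivity can be sketched as follows. The linear mapping from angle to pulse width and the 10 microsecond jitter figure are assumptions for illustration, not measurements; the 50 ms window is the figure quoted above (many hobby servos are driven with a 20 ms frame instead, so the period is left as a parameter).

```python
def pulse_width_ms(angle_deg, min_ms=1.0, max_ms=2.0):
    """Map an angle in [-90, +90] degrees linearly onto the servo pulse range."""
    angle = max(-90.0, min(90.0, angle_deg))
    return min_ms + (angle + 90.0) / 180.0 * (max_ms - min_ms)


def duty_cycle_percent(pulse_ms, period_ms):
    """Share of the PWM period spent high, as a percentage."""
    return 100.0 * pulse_ms / period_ms


def angle_error_deg(jitter_us, min_ms=1.0, max_ms=2.0):
    """Pointing error caused by a given error in the pulse width."""
    return 180.0 * (jitter_us / 1000.0) / (max_ms - min_ms)


PERIOD_MS = 50.0  # the pulse window quoted in the text

for angle in (-90, 0, 90):
    pw = pulse_width_ms(angle)
    duty = duty_cycle_percent(pw, PERIOD_MS)
    print(f"{angle:+4d} deg -> {pw:.2f} ms pulse, {duty:.1f} % duty")

# Hypothetical timing error of 10 microseconds, for illustration only.
print(f"10 us of jitter -> {angle_error_deg(10):.1f} deg of movement")
```

The full 180 degree sweep lives inside a band of duty cycle only two percentage points wide, so even small timing errors show up as visible movement.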

I chose to just turn off PWM when the servo is static, to cut down interference with the microphones. This leaves only friction holding the position, where normally the motor exerts considerable holding force.
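A minimal sketch of that "drive, then release" idea is below. It is not my actual servo code: the class name, the settle time and the deadband are hypothetical, and `set_duty` stands in for whatever PWM call your library provides. The sketch assumes that a duty cycle of 0 stops the pulses so the servo goes limp.

```python
import time


class ServoPointer:
    """Drive a servo only while it moves, then release it.

    set_duty is any callable that applies a duty cycle in percent; on a Pi it
    could wrap the PWM library of your choice (an assumption, not shown here).
    """

    def __init__(self, set_duty, period_ms=50.0, settle_s=0.5, deadband_deg=3.0):
        self.set_duty = set_duty
        self.period_ms = period_ms
        self.settle_s = settle_s          # hypothetical travel time allowance
        self.deadband_deg = deadband_deg  # hypothetical "close enough" margin
        self.last_angle = None

    def point(self, angle_deg):
        """Move to angle_deg; return True if the servo was actually driven."""
        angle = max(-90.0, min(90.0, angle_deg))
        if self.last_angle is not None and abs(angle - self.last_angle) < self.deadband_deg:
            return False  # close enough already: leave PWM off
        pulse_ms = 1.0 + (angle + 90.0) / 180.0  # 1 ms to 2 ms across the range
        self.set_duty(100.0 * pulse_ms / self.period_ms)
        time.sleep(self.settle_s)  # give the servo time to reach the position
        self.set_duty(0.0)  # PWM off: only friction holds the position now
        self.last_angle = angle
        return True


# Stand-in for real hardware: record the duty cycles that would be applied.
applied = []
servo = ServoPointer(applied.append, settle_s=0.0)
servo.point(45.0)
servo.point(46.0)  # inside the deadband, so nothing is sent
print(applied)
```

The deadband keeps small changes in the reported angle from waking the servo, which also limits how often PWM noise reaches the microphones.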

Although the AEC code is called, there is no actual speaker signal in this test.

Noise Suppression was disappointing. Comparing the response with and without suppression turned on, and briefly comparing recordings, I found no real effect in my test environment. (This is a hardware-independent function, though it changes algorithms for a higher sample rate signal.)

--------

Real Time Speech to Text Detection, with no internet access

An easier-to-use package I ran across is by zamia-speech.org, a company that has put together its own pre-trained speech 'dictionaries' (ASR Models) with open source code. They support Pocketsphinx and Kaldi; the latter is used in my experiment. The ASR Model used here has some 160,000 words.

Their Raspberry Pi compatible library is based on the same vocabulary, but has been simplified to reduce computing power requirements, so the results are not as noise tolerant as a more powerful PC would provide. While it is a pre-trained, speaker-independent system, you do need to enunciate a bit more clearly than normal, in a low-noise environment, to get a low error rate. Carefully pointing the mics at my TV set would get about 80% accuracy with speech isolated from background music, etc.; with noisier conversation it could get every word wrong.

Kaldi appears to use a 2-pass solution(?). The demo ver. 0.2 (which is as far as I got) shows the word guesses to the left of the cursor. It fouled up when continuous speech reached the right edge of the window, so I made test samples with silence breaks to work around the demo's text formatting choke. (The engine is capable of decoding continuous speech without breaks.)
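My accuracy figures above are rough estimates. For anyone who wants a repeatable number, one common approach is to count word-level edits between what was said and what was decoded. The sketch below is a plain edit-distance count and is not part of the Zamia or Kaldi code; the function names and sample sentences are made up.

```python
def word_errors(reference, hypothesis):
    """Return (errors, reference_length) using word-level edit distance."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    # Classic edit-distance table, kept one row at a time.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        cur = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            cur.append(min(prev[j] + 1,          # word missing from the output
                           cur[j - 1] + 1,       # extra word in the output
                           prev[j - 1] + cost))  # match or substitution
        prev = cur
    return prev[-1], len(ref)


def word_accuracy(reference, hypothesis):
    """Accuracy as 1 minus the word error rate, floored at zero."""
    errors, n_ref = word_errors(reference, hypothesis)
    if n_ref == 0:
        raise ValueError("reference text is empty")
    return max(0.0, 1.0 - errors / n_ref)


# Made-up example: what was said versus what a recognizer might print.
said = "turn the speaker toward the person talking"
heard = "turn the speaker to word the person talking"
errors, total = word_errors(said, heard)
print(f"{errors} errors in {total} words, accuracy {word_accuracy(said, heard):.0%}")
```

Extra words count as errors too, which is why a very noisy recording can score zero even when a few words happen to match.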

A side note:

While testing speech to text with the Pi running from a USB backup battery, I noticed that performance was degraded, after having seen a low voltage message and getting the lightning bolt (pointing down) in the upper right of the screen. I assume the processor is throttled back under low voltage conditions. I checked some status parameters related to clock speed and voltages but found no difference. I didn't check the power LED drive, which is said to be dimmed under low supply conditions.

The voltage out of a couple of charged-up USB batteries I checked was about 4.85V, within the +/- 5% spec I have seen stated (one published spec mentioned a 5.1V standard supply). My line-powered supply output 5.15V. It seems odd that performance would drop with just a 0.3 volt difference; I will have to recheck this more closely.
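For the recheck, one status value that reports under-voltage and throttling directly is the bitmask printed by `vcgencmd get_throttled` on the Pi. The sketch below only decodes that text, so it can be tried anywhere; the sample value is illustrative and not a reading from my board, and I am not claiming this is the parameter I looked at earlier.

```python
# Bit positions as documented for the firmware's get_throttled value.
THROTTLE_FLAGS = {
    0: "under-voltage detected now",
    1: "ARM frequency capped now",
    2: "currently throttled",
    3: "soft temperature limit active",
    16: "under-voltage has occurred since boot",
    17: "ARM frequency capping has occurred since boot",
    18: "throttling has occurred since boot",
    19: "soft temperature limit has occurred since boot",
}


def decode_throttled(text):
    """Turn a line such as 'throttled=0x50005' into readable flag names."""
    value = int(text.strip().split("=", 1)[1], 16)
    return [label for bit, label in sorted(THROTTLE_FLAGS.items())
            if value & (1 << bit)]


# On a Pi the input could come from the firmware tool, for example:
#   subprocess.check_output(["vcgencmd", "get_throttled"], text=True)
sample = "throttled=0x50005"  # illustrative value only
for flag in decode_throttled(sample):
    print(flag)
```

The upper bits are sticky since boot, so they can show that throttling happened during a test even if the clock speed looks normal by the time you check it.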

--------

More Experimental Code with Advanced Functionality

Seeed Studio only provides a complete solution for Alexa/Siri. They suggest using a university project, ODAS, to experiment with the other applications their sales literature describes. It is all compiled C code, making it compatible with the small processors used in their robots, unlike the hodgepodge of Linux code that Voice-Engine ends up using. The author has plans for a release version with better docs and easier-to-use code, but it appears to have been in beta limbo for more than 8 months now (he is working on it in his spare time). Until then, the earlier code is too challenging for me to play with.

Source code: github.com/introlab/oda

Thesis: tinyurl.com/odasthesis (French Canadian)

Demo video: tinyurl.com/odas-video

See two complete speech response packages, that have several example applications, in:

More (Local) Self-Contained Internet-Free Voice Response Solutions
